As we sit here in the 21st century, it’s hard to imagine a time when computers weren’t an integral part of our daily lives. After all, we carry them in our pockets, wear them on our wrists, and have them seamlessly integrated into our homes and workplaces.

However, there was a time, not so long ago, when computers were far less powerful, much larger, and much more expensive. That changed in the 1990s with the microchip revolution.

Today we will explore the impact that the microchip had on computers during the 1990s, including its effects on computing power, size, and cost, as well as its role in shaping the digital world in which we live today.

How Did The Microchip Change Computers During The 1990s?

The microchip changed computers during the 1990s by making them faster, smaller, and more affordable. With the newfound ability to pack millions of transistors onto a single silicon chip, the computer industry was able to achieve increases in computing power that were previously impossible, leading to the rapid development of more sophisticated software and complex applications.

Computers also became much smaller than ever before, which made them more portable and far more convenient to use. Greater integration brought manufacturing costs down as well, so computers became cheaper and more accessible to the general public than ever.

Overall, the microchip played an integral role in shaping the computer revolution throughout the 90s and laid the foundation for the digital world that continues to evolve to this day.

So, let’s take a closer look at the far-reaching impact that microchip technology had on 90s computing.

Before The Microchip

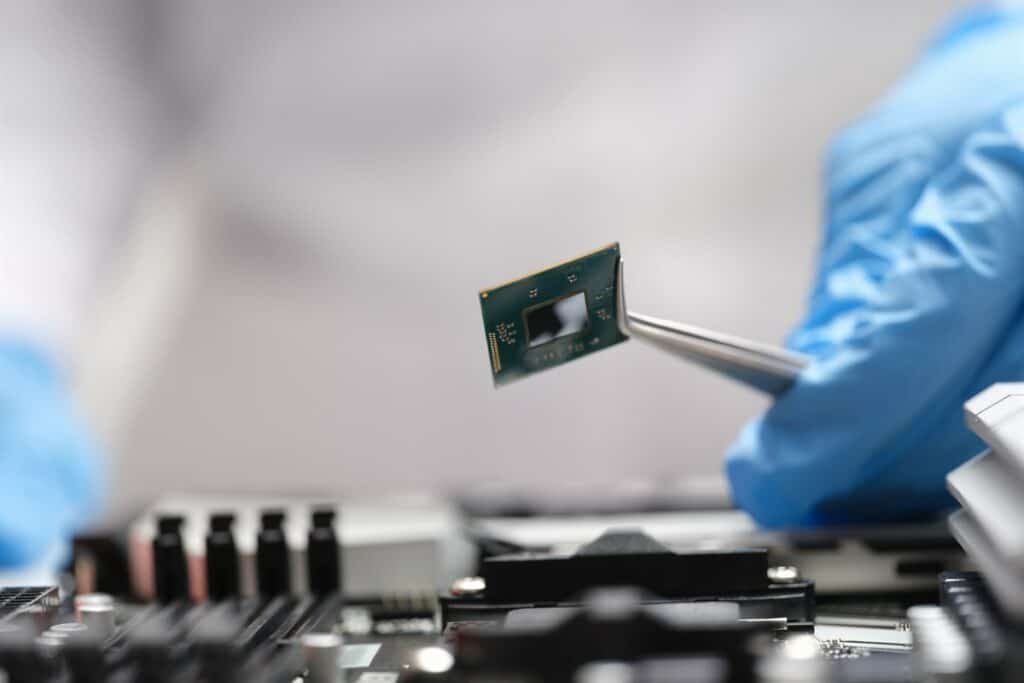

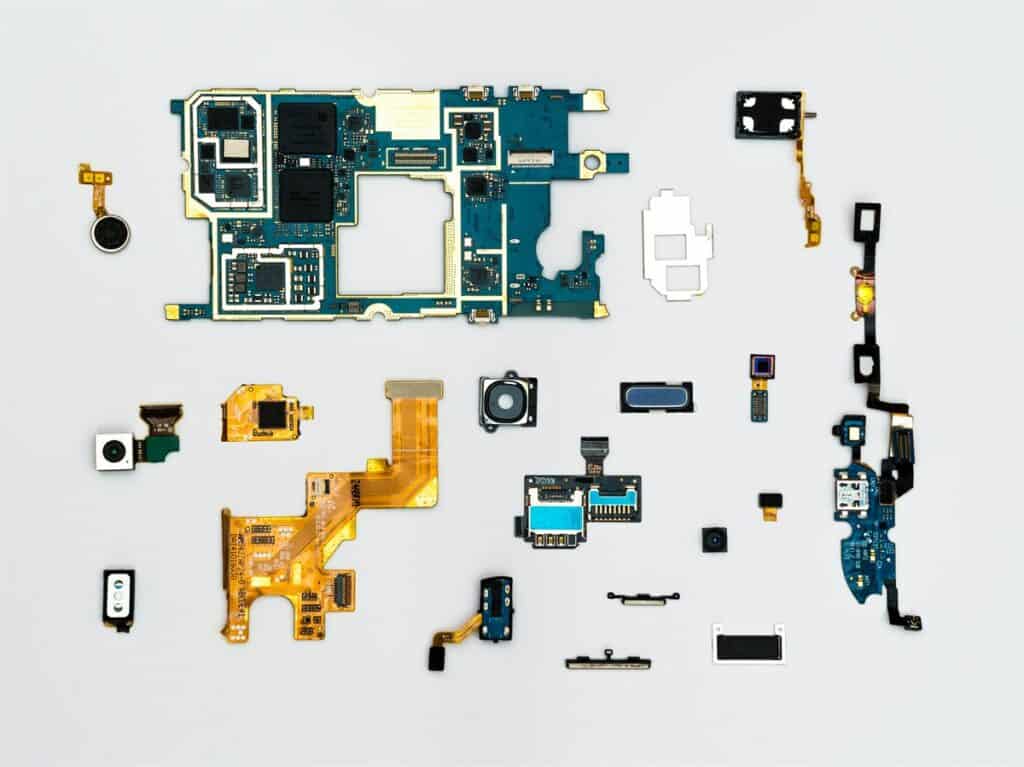

The integrated circuit, more commonly known as the microchip, is a small electronic component containing many transistors, capacitors, and resistors on a single piece of semiconducting silicon.

While it was originally invented in the late 1950s, its true potential was not realized until the 1990s, when it revolutionized computing.

Before the 90s microprocessor revolution, computers were clunky, expensive, and not very powerful machines. Believe it or not, the very first computers took up entire rooms and could only handle a few thousand operations per second, which may sound like a lot but isn’t.

To put that in perspective, the speed of today’s consumer-grade processors is measured in gigahertz (GHz), meaning billions of cycles per second. In other words, modern-day computers are millions of times more powerful than those early machines, thanks to the invention and integration of microchips.
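For readers who like to see the arithmetic, here is a minimal sketch of that comparison. The figures are illustrative assumptions rather than measurements: 3,000 operations per second stands in for "a few thousand", 3 GHz stands in for a typical modern clock speed, and one clock cycle is treated as one operation, which is a deliberate simplification. The function name `speedup` is made up for this example.

```python
# Rough, illustrative figures only (assumptions, not measurements).
EARLY_OPS_PER_SECOND = 3_000        # stand-in for "a few thousand" per second
MODERN_CLOCK_HZ = 3_000_000_000     # a 3 GHz clock: 3 billion cycles per second


def speedup(early_ops_per_second: int, modern_clock_hz: int) -> float:
    """Return how many times more cycles per second the modern chip runs.

    Simplification: one clock cycle is counted as one operation.
    """
    return modern_clock_hz / early_ops_per_second


if __name__ == "__main__":
    ratio = speedup(EARLY_OPS_PER_SECOND, MODERN_CLOCK_HZ)
    print(f"Roughly {ratio:,.0f} times faster")  # Roughly 1,000,000 times faster
```

Even under these simple assumptions, a single core comes out around a million times faster. Real chips have several cores and often do more than one thing per cycle, so the true gap is larger still.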

How The Microchip Changed Everything

In the 1990s, the microchip changed everything. With lightning-fast advances in processing technology, computers became smaller, more powerful, and significantly more affordable than ever before.

The development of microchips in the 1990s enabled computers to perform tasks that were previously impossible, which had a significant impact on the world and the average consumer.

Smaller Circuits

The microchips of the 90s allowed for rapid miniaturization of the most essential computer components, making it feasible for manufacturers to create more powerful computers that were smaller and more portable than ever before.

This led to small home computers and laptops becoming commonplace, and they were significantly easier to carry around. The microchips developed in the 90s gave us “computing on the go” and paved the way for the modern-day revolution of smartphones and communication devices.

More Powerful Computing

The microchips of the 90s also made computers more efficient by increasing their processing power and computational capacity.

As a direct result of this increased processing power, computers in the 90s started to handle far more complex tasks and run significantly more advanced software, all while using less power. This led to the development of a ton of new programs and helpful software that provided consumers with unexpected versatility.

All of a sudden, people started to use computers for new and exciting purposes.

More Connected Than Ever

Moreover, the microchips developed in the 90s made it possible to connect computers into vast and complex networks, allowing for the quick and easy sharing of information and resources between a large number of computers. This led to the development and lightning-fast growth of the Internet, which transformed the way people communicate with one another.

Small but Mighty: The Unprecedented Impact of Microchips on 90s Computing

For those of us who grew up in the 1990s, the microchip represents a time of unprecedented change and blistering progress. I still remember the excitement of getting my very first home computer (a cow-spotted Gateway model) and the thrill of discovering all the things it could do. And who can forget the sound of a dial-up modem connecting to the internet, or the frustration of waiting for image-heavy web pages to load?

Looking back, it’s clear that the microchip changed everything. It made computers faster, smaller, and more affordable. It also made them more accessible, more powerful, and more interconnected.

The 90s microchip allowed us to connect with each other in ways that were unimaginable just a few years before. And it set the stage for the digital world that we are all living in today.

The microchip was a game-changer for the computer industry in the 1990s. So the next time you use your computer, smartphone, or any other modern-day electronic device, take a moment to appreciate the humble 90s microchip that changed the world.